# Google Colab setup.
import subprocess
import sys

def setup_google_colab_execution():
    # Clone the tutorial repository, install its Colab requirements and copy the helper module
    subprocess.run(["git", "clone", "https://github.com/bookingcom/uplift-modeling-for-marketing-personalization-tutorial"])
    subprocess.run([sys.executable, "-m", "pip", "install", "-r", "uplift-modeling-for-marketing-personalization-tutorial/tutorial/requirements-colab.txt"])
    subprocess.run(["cp", "uplift-modeling-for-marketing-personalization-tutorial/tutorial/notebooks/utils.py", "./"])

running_on_google_colab = 'google.colab' in sys.modules
if running_on_google_colab:
    setup_google_colab_execution()

Explainability and Trust

Building trust in your models is crucial for their adoption by business stakeholders. One way to build trust is to provide insight into your models’ outcomes.

Options to provides insights for causal models are:

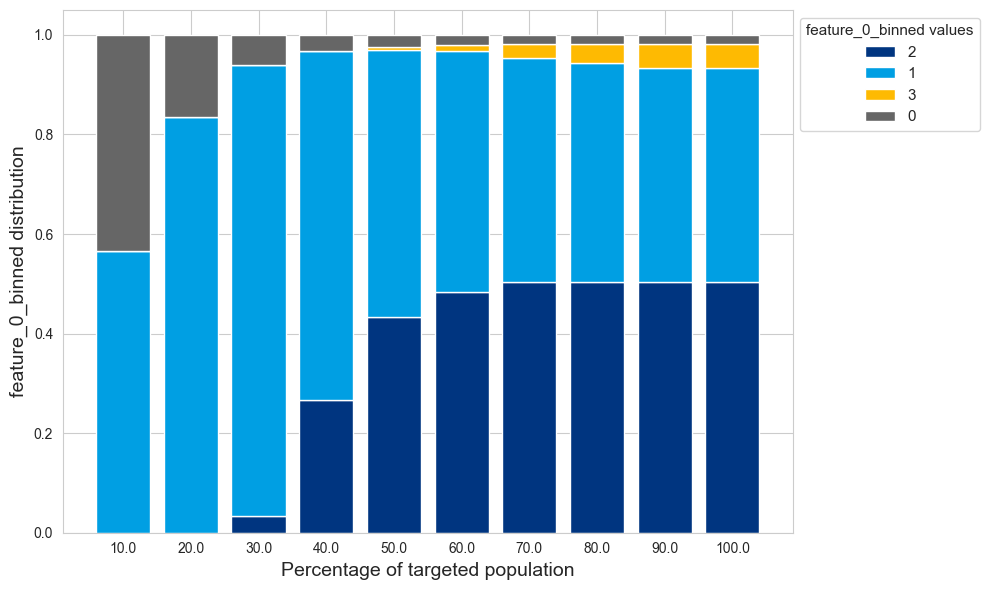

Feature vs Outcome distributions based on targeted population

SHAP using a surrogate model

2. SHAP: SHapley Additive exPlanations

SHAP is a method derived from cooperative game theory. It is used to explain the output of machine learning models by assigning each feature an importance value for a particular prediction (Papers: SHAP, SHAP Tree explainer).

Background

Shapley values were originally introduced in cooperative game theory to fairly distribute the “payout” among players according to their contribution to the overall game. In the context of machine learning, the “game” is the prediction task, and the “players” are the features of the dataset. The SHAP framework calculates the contribution of each feature to a prediction by considering all possible combinations (coalitions) of features and averaging the feature’s marginal contribution across them.
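For reference, the classic Shapley value of player $i$ in a game with player set $N$ and value function $v$ (the payout achieved by each coalition $S$) is a weighted average of marginal contributions, where each coalition is weighted by the fraction of player orderings in which exactly the members of $S$ come before $i$:

$$
\phi_i(v) = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,\bigl(|N| - |S| - 1\bigr)!}{|N|!}\,\Bigl[v\bigl(S \cup \{i\}\bigr) - v(S)\Bigr]
$$

These values satisfy efficiency, $\sum_{i \in N} \phi_i(v) = v(N) - v(\emptyset)$: the full payout is distributed among the players. In SHAP, $v(S)$ is the model’s expected prediction when only the features in $S$ are known.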

How SHAP Works

SHAP values explain individual predictions by computing the contribution of each feature towards the prediction. This is done by comparing what the model predicts with and without the feature of interest, across all possible combinations of the other features. The result is a set of additive importance values: added to a base value (the model’s average prediction over a background dataset), they sum to the model’s output for that particular instance.
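To make the “with and without the feature” comparison concrete, the sketch below computes exact Shapley values by brute force for a hypothetical toy model (`toy_model`) and checks the additivity property. It assumes an interventional definition of “without”: features outside the coalition take their values from a small background dataset. The helper names `coalition_value` and `exact_shapley` are illustrative only, not part of the `shap` API.

```python
import itertools
import math

import numpy as np


def toy_model(X):
    # Hypothetical model: linear terms plus an interaction between features 0 and 1
    return 2.0 * X[:, 0] + X[:, 0] * X[:, 1] - 0.5 * X[:, 2]


def coalition_value(f, x, background, coalition):
    # v(S): mean prediction when the features in S are set to x's values and
    # all other features keep the values of each background row
    X = background.copy()
    idx = list(coalition)
    X[:, idx] = x[idx]
    return f(X).mean()


def exact_shapley(f, x, background):
    # Enumerates every coalition, so the cost grows as 2^n_features
    n = x.shape[0]
    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for S in itertools.combinations(others, size):
                with_i = coalition_value(f, x, background, S + (i,))
                without_i = coalition_value(f, x, background, S)
                phi[i] += weight * (with_i - without_i)
    return phi


rng = np.random.default_rng(0)
background = rng.normal(size=(200, 3))
x = np.array([1.0, 2.0, -1.0])

phi = exact_shapley(toy_model, x, background)
base_value = toy_model(background).mean()
prediction = toy_model(x[None, :])[0]

# Additivity: base value + sum of SHAP values reproduces the prediction
print(np.isclose(base_value + phi.sum(), prediction))  # True
```

Brute-force enumeration is only feasible for a handful of features; `shap.TreeExplainer` avoids it for tree ensembles with a polynomial-time algorithm.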

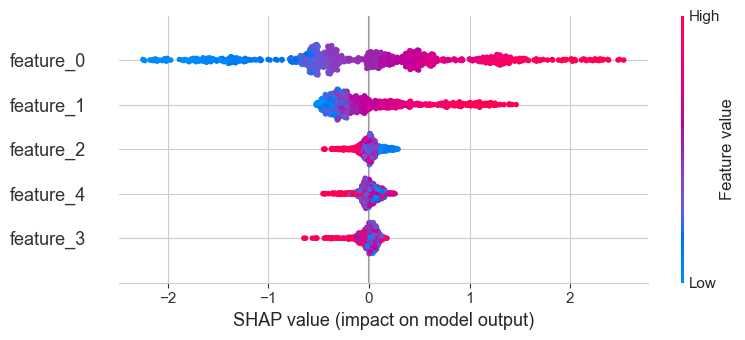

Local Interpretability: SHAP values for a single instance reveal why a specific prediction was made, giving insights into the model’s behavior for that particular case.

Global Interpretability: By aggregating SHAP values across many instances, one can understand the overall impact of each feature on the model’s predictions.
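A common way to aggregate local explanations into a global one is to average the absolute SHAP values per feature, which is the ranking that `shap.summary_plot(..., plot_type="bar")` displays. The sketch below assumes a hypothetical linear model with independent features, for which SHAP values have the closed form $\phi_{ij} = w_j\,(x_{ij} - \bar{x}_j)$, so the matrix of local explanations can be built with NumPy alone; the coefficients `w` are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 3))
w = np.array([3.0, -1.0, 0.1])  # hypothetical linear model coefficients

# Local explanations: one row of SHAP values per instance (closed form for a linear model)
shap_matrix = w * (X - X.mean(axis=0))

# Global explanation: mean absolute SHAP value per feature
global_importance = np.abs(shap_matrix).mean(axis=0)
ranking = np.argsort(global_importance)[::-1]
print(ranking)  # [0 1 2]
```

Taking absolute values before averaging matters: for a linear model the signed SHAP values of each feature average to zero, so the mean of the raw values would hide every feature.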

SHAP in Uplift Models

SHAP values are also useful for explaining uplift models: they show which features matter most and how each one contributes to the predicted uplift.

SHAP works well with higher-order models (T-learners, S-learners, X-learners, …), especially when a surrogate model is used to approximate the treatment effect.

Higher-Order Models: SHAP Surrogates

Higher-order models can be explained using a global surrogate model, allowing you to simplify the model’s explanation while retaining interpretability.

Example: SHAP with a Surrogate Model for an S-Learner

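SHAP values are typically used to explain the predictions of a single model, not the difference between two model outputs. An S-Learner’s uplift prediction is exactly such a difference: the model’s prediction with the treatment indicator set to 1 minus its prediction with the indicator set to 0.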
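The “difference between two model outputs” can be sketched as follows. This is a minimal illustration, assuming a hypothetical synthetic dataset (true uplift `1 + 2 * feature_0`) and an ordinary least-squares base learner with treatment-feature interactions standing in for the tutorial’s own S-learner; `design` and `predict` are illustrative helpers.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 2000
X = rng.normal(size=(n, 2))
t = rng.integers(0, 2, size=n)
# Hypothetical data: true uplift is 1 + 2 * feature_0, feature_1 only shifts the baseline
y = X[:, 1] + t * (1.0 + 2.0 * X[:, 0]) + rng.normal(scale=0.1, size=n)


def design(X, t):
    # A single model for everyone: the treatment flag is just another input,
    # plus treatment-feature interactions so the effect can vary with X
    t_col = np.broadcast_to(np.asarray(t, dtype=float), X.shape[:1]).reshape(-1, 1)
    return np.hstack([np.ones_like(t_col), X, t_col, X * t_col])


# Fit the S-learner (here: ordinary least squares) on the observed outcome
coef, *_ = np.linalg.lstsq(design(X, t), y, rcond=None)


def predict(X, t):
    return design(X, t) @ coef


# The uplift is the difference between two predictions of the same model
uplift = predict(X, 1) - predict(X, 0)
```

Explaining `predict(X, 1)` or `predict(X, 0)` with SHAP would attribute the outcome level, not the uplift; the uplift itself is not the output of any single model call.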

This is where a SHAP surrogate model comes into play. A surrogate model is a simpler or more interpretable model that approximates the behavior of a more complex model. To explain an S-Learner, we can train a surrogate model to predict the treatment effect directly, rather than the outcome, and then explain the surrogate with SHAP.
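Because SHAP then explains the surrogate rather than the S-learner itself, it can help to check how faithfully the surrogate reproduces the uplift estimates before trusting its explanation. The sketch below assumes hypothetical uplift estimates `tau_hat` and uses a least-squares model on linear and squared features as a stand-in for the `LGBMRegressor` surrogate used below; “fidelity” here is simply the R² of the surrogate on held-out rows.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(1000, 3))
# Hypothetical stand-in for the S-learner's estimated treatment effects
tau_hat = 1.0 + 2.0 * X[:, 0] - 0.5 * X[:, 1] ** 2 + rng.normal(scale=0.1, size=1000)


def basis(X):
    # Simple surrogate features: intercept, linear and squared terms
    return np.hstack([np.ones((X.shape[0], 1)), X, X ** 2])


train, holdout = slice(0, 800), slice(800, None)
coef, *_ = np.linalg.lstsq(basis(X[train]), tau_hat[train], rcond=None)
pred = basis(X[holdout]) @ coef

# Fidelity: share of the variance of the uplift estimates the surrogate reproduces
ss_res = np.sum((tau_hat[holdout] - pred) ** 2)
ss_tot = np.sum((tau_hat[holdout] - tau_hat[holdout].mean()) ** 2)
fidelity_r2 = 1.0 - ss_res / ss_tot
print(f"surrogate fidelity R^2 on held-out rows: {fidelity_r2:.3f}")
```

A low fidelity score would mean the SHAP plots describe a model that does not track the S-learner’s uplift well, so the explanation should be read with caution.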

# The S-learner is trained on the outcome
pd.concat([X_train, y_train], axis=1).head()

| | feature_0 | feature_1 | feature_2 | feature_3 | feature_4 | treatment | outcome |
|---|---|---|---|---|---|---|---|
| 4227 | 0.057054 | -0.081551 | -1.297212 | 0.160135 | -0.583626 | 0 | 1.240913 |
| 4676 | -0.761419 | -0.478505 | 0.810051 | -1.501827 | 1.105807 | 0 | -1.086773 |
| 800 | -0.863494 | -0.031203 | 0.018017 | 0.472630 | -1.366858 | 0 | -0.858750 |
| 3671 | 1.838755 | 1.605690 | -0.875324 | 0.072802 | 1.710809 | 1 | 6.664757 |
| 4193 | 0.557739 | -0.107983 | 0.140180 | 0.897722 | 0.929312 | 0 | 0.750874 |

# The surrogate model will be trained on the treatment effect
# Keep only the features: drop the treatment indicator and the "score" column
X_test_surrogate = X_test.drop(columns=["treatment", "score"]).reset_index(drop=True)
# Wrap the S-learner's estimated treatment effects as the surrogate's target
treatment_effect_s_learner = pd.Series(treatment_effect_s_learner,
                                       name="treatment_effect_s_learner")
pd.concat([X_test_surrogate, treatment_effect_s_learner], axis=1).head()

| | feature_0 | feature_1 | feature_2 | feature_3 | feature_4 | treatment_effect_s_learner |
|---|---|---|---|---|---|---|
| 0 | -2.241916 | 0.205456 | -0.775607 | -0.800335 | -0.498987 | -1.528042 |
| 1 | -0.719472 | -0.625107 | 0.943159 | -0.008871 | 1.133688 | -0.119800 |
| 2 | 1.126238 | -0.240398 | 0.767844 | -0.194585 | 0.033871 | 1.477655 |
| 3 | 0.783359 | -1.470490 | -1.262770 | -0.775875 | 0.078964 | 1.314942 |
| 4 | -1.173880 | -0.197938 | 2.067906 | -1.831682 | -1.051310 | 0.045547 |

import shap
from lightgbm import LGBMRegressor

# Train a surrogate regression model to predict the S-learner's treatment effect from the features
surrogate_model = LGBMRegressor()
surrogate_model.fit(X_test_surrogate, treatment_effect_s_learner)

# Use SHAP to explain the surrogate model
explainer = shap.TreeExplainer(surrogate_model)
shap_values = explainer.shap_values(X_test_surrogate)

# Plot SHAP summary plot for feature importance
shap.summary_plot(shap_values, X_test_surrogate, feature_names=X_test_surrogate.columns)

[LightGBM] [Info] Auto-choosing col-wise multi-threading, the overhead of testing was 0.000299 seconds.
You can set `force_col_wise=true` to remove the overhead.
[LightGBM] [Info] Total Bins 1275
[LightGBM] [Info] Number of data points in the train set: 1000, number of used features: 5
[LightGBM] [Info] Start training from score 0.752923

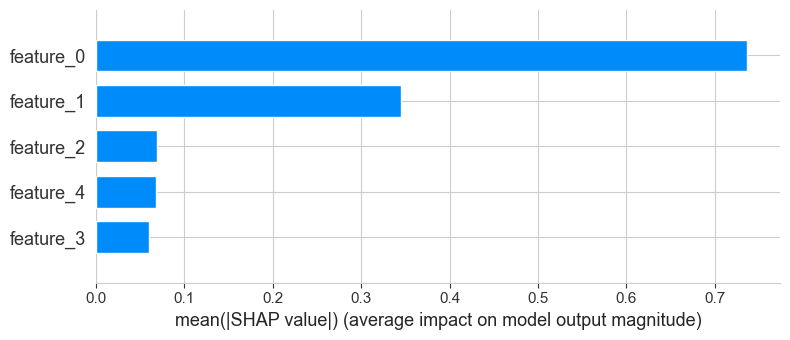

# Bar plot: global feature importance as the mean absolute SHAP value per feature
shap.summary_plot(shap_values, X_test_surrogate, feature_names=X_test_surrogate.columns, plot_type="bar")